Good Monday Morning

It’s June 27th. Have a great and safe long weekend ahead. And please keep our pet friends in mind by not using fireworks in residential neighborhoods. Some dogs and cats undoubtedly love them, but not the ones I’ve spent my time with.

Today’s Spotlight is 1,394 words — about 5 minutes to read.

News To Know Now

Quoted: “We are not yet sure how companies may respond to law enforcement requests for any abortion related data, and you may not have much control over their choices. But you can do a lot to control who you are giving your information to, what kind of data they get, and how it might be connected to the rest of your digital life.”

— The Electronic Frontier Foundation in its “Security and Privacy Tips for People Seeking An Abortion.” The group also covers protests and offers great general advice as well as some unique suggestions, such as using different browsers.

a) Amazon showed off voice synthesizing software that can emulate the speech of a specific person. That takes voice fakes to a whole new level, and the company managed to creep out the internet with a demo in which a fictional deceased grandmother read a bedtime story.

b) Microsoft is taking its automated software in a different direction: it announced that its facial recognition programs will no longer predict a person’s gender, age, or emotional state. How principled that stand is remains unclear to me, given that it comes on the heels of Facebook’s announcement that it will test facial recognition to verify a user’s age when they set up a new Instagram account.

c) U.S. companies would be forbidden from selling or transferring location and health data for a period of ten years under legislation proposed by Sen. Elizabeth Warren (D-MA). The legislation has the backing of progressive Democratic senators but is unlikely to pass the Senate. Several dozen major companies sell location and health data, and thousands more use it for marketing and demographic analysis.

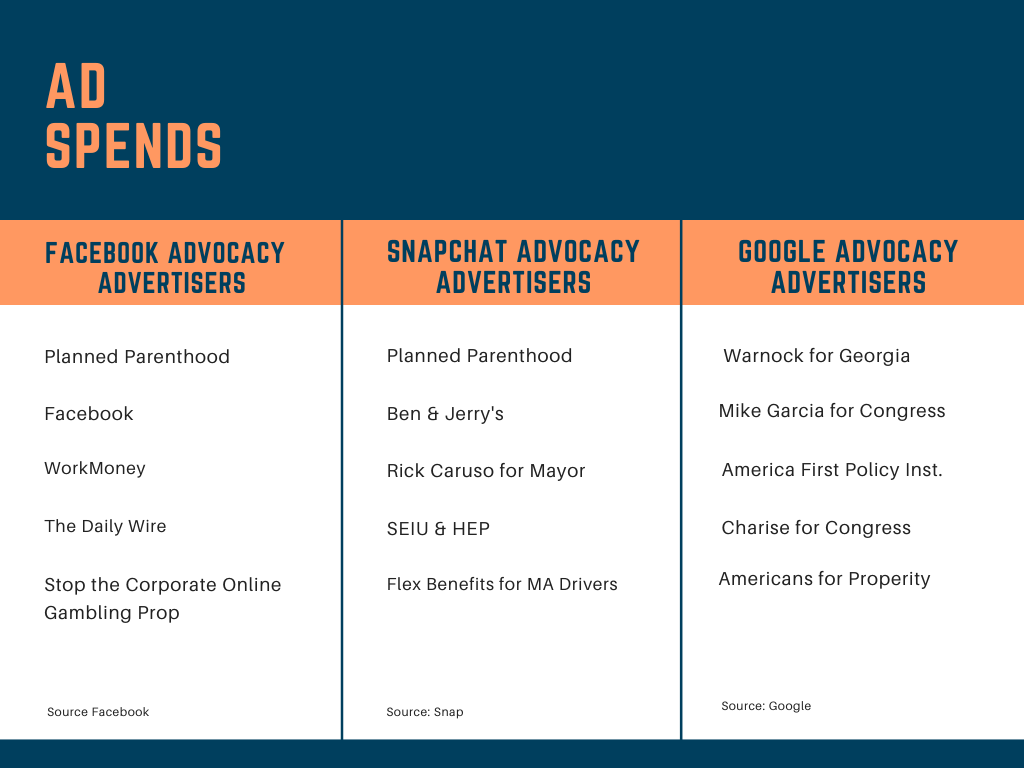

Trends & Spends

Spotlight Explainer — Police Data Tracking Grows, Hidden Cameras & Fake Social Media In Constant Use

Our 4th annual Police Technology report shows that the trends we saw in previous years have become standard practice. Rather than merely monitoring social media, for example, police are using fake profiles to surveil people. Police also continue to buy facial recognition data, location information, and behavioral data.

All of this gets mashed together in a series of predictive models that inform personnel and equipment allocations and can even create surveillance assignments.

Location Data Use Is Prevalent

Imagine all the ways technology tracks you: your vehicle, your phone, your online activity, and the millions of doorbell cameras, traffic cameras, and location data records that have become widespread in police technology. The real question is when you are not being tracked. Smart TVs and other home devices not only report on how we use them but also establish our presence at a physical address. So do automated toll booth systems, smartwatches, and fitness trackers.

Sometimes that is a good thing. Court documents show that location data pinpointed dozens of people arrested for breaking into and ransacking the U.S. Capitol to specific areas during the attack. Other times, how the data is used is less clear.

The FBI last year conducted more than 3.4 million searches of private data that the NSA had compiled, according to the Wall Street Journal. No one alleges that the searches were illegal or improper, only that millions of searches is a lot of data.

Government agencies don’t only search data the government has collected. During a six-month period in 2020, Facebook, Google, Apple, and Microsoft received more than 100,000 subpoenas for data.

Police Tech Data Piles Up & Is Sometimes Lost

You probably have lots of data on your computer, phone, and other devices, including everything from screenshots to passwords and text messages. With all that data piling up, it’s not hard to imagine inadvertent releases and leaks.

We learned last Thanksgiving that helicopter surveillance data from Texas and Georgia had been leaked online.

In the last year alone, police in New York and Boston have come under fire for buying surveillance technology without public oversight. Their purchases include “cell tower simulators,” which are used to find missing people or criminals by mimicking a cell tower signal. Police acknowledge that every phone in the area may also connect to these devices, but say they ordinarily discard that data.

Some experts are concerned about a new trend in 911 service that allows phone companies to relay calls, video, and text into emergency call centers. Along with that rich media, phones can also transmit precise GPS coordinates, vertical location (such as which floor of a building the caller is on), speed, and potentially anything else on the device.

Police Tech Goes Social

A report published this spring by the Minnesota Department of Human Rights about the Minneapolis Police Department alleged, “MPD officers used covert, or fake, social media accounts to surveil and engage Black individuals, Black organizations, and elected officials unrelated to criminal activity, without a public safety objective.”

MIT Technology Review expanded on that by reporting that “officers kept at least three watch lists of people present at and around protests related to race and policing. Nine state and local policing groups were part of a multiagency response program called Operation Safety Net, which worked in concert with the Federal Bureau of Investigation and the US Department of Homeland Security to acquire surveillance tools [and] compile data sets … during racial protests in the state.”

Police in Los Angeles are doing the same, according to an earlier exposé by the Brennan Center. Its reporting included many disturbing documents, among them a list of terms that triggered surveillance, including the phrases “#BlackLivesMatter” and “#fuckdonaldtrump” and the names Tamir Rice and Sandra Bland. Rice and Bland were Black Americans who died after police encounters: Rice was shot by an officer, and Bland died in a jail cell.

Police Tech Predicting Crime

All of this data often gets fed back to law enforcement agencies in the form of predictions about crime in particular areas. The Markup and Gizmodo analyzed more than five million of those predictions and found striking racial bias in the results. In multiple cities, neighborhoods made up mostly of white residents had predicted crime volumes that were orders of magnitude lower than those of nearby majority nonwhite neighborhoods. In the case of the Los Angeles data, reporters found that the agencies had inadvertently published millions of predictions.

Researchers suggest that the racial bias might stem from the disproportionate number of arrests of nonwhite people. They also argue that data carrying that bias cannot produce accurate predictions about a population. And when those predictions are combined with personal data, they can produce a relative score suggesting that a person will be involved in crime, either as a victim or as a perpetrator, without reliably indicating which.

Still interested? Have a look at our 2021 police tech roundup.

Did That Really Happen? — TED Talk Did Not Endorse Pedophilia

The AP debunked an old hoax that resurfaced this week: a doctored graphic claiming that a TED talk endorsed pedophilia as a natural orientation. Snopes debunked the same graphic four years ago. I wish people would stop doing this.

Following Up — Advertisers Can’t Use Facebook to Discriminate

Although legitimate ad agencies know and understand that we can’t advertise in discriminatory ways, such as excluding age or racial groups from seeing ads related to housing, jobs, and other areas, some advertisers kept doing just that.

Facebook is revising its systems again to settle a Justice Dept. action and prohibit that sort of targeting.

Protip — What Do Cookie Preferences Really Mean?

After first inserting your Oreo or Fig Newton joke here, head over to Wired and learn about browser cookies from Lou Montulli, the software engineer who invented them in 1994.

Screening Room – Meta

Science Fiction World — Shirts that Monitor Hearts

A little heavier than a shirt but lighter than a jacket is how engineers describe clothing made of special fibers that detect the sound of a heartbeat and convert it into an electrical signal. There are endless possibilities for acoustic fiber beyond personal cardiac monitoring, including monitoring sea life, detecting fetal heartbeats, and, of course, answering phone calls.

Coffee Break — Noisy Cities

A startup focused on reducing traffic pollution has mapped the relative noise levels of individual streets in Paris, London, and New York. Have a listen here.

Sign of the Times