Good Monday Morning

The Supreme Court returns today on this first Monday in October.

Today’s Spotlight is 1,457 words, about 8 minutes to read.

3 Headlines to Know Now

Apple Pulls ICE-Tracking App After DOJ Demand

Apple removed the crowdsourced ICEBlock app, which let users report ICE agent activity, after the Justice Department said it endangered officers.

UK to Mandate Digital IDs for All Workers

Prime Minister Keir Starmer announced plans to require digital IDs to work in the UK by 2029, calling it a tool against illegal employment while critics warn of privacy risks and government overreach.

ICE Plans 24/7 Social Media Surveillance Operation

Immigration and Customs Enforcement is seeking contractors to monitor social media around the clock and feed intelligence into deportation databases, raising major privacy and civil liberties concerns.

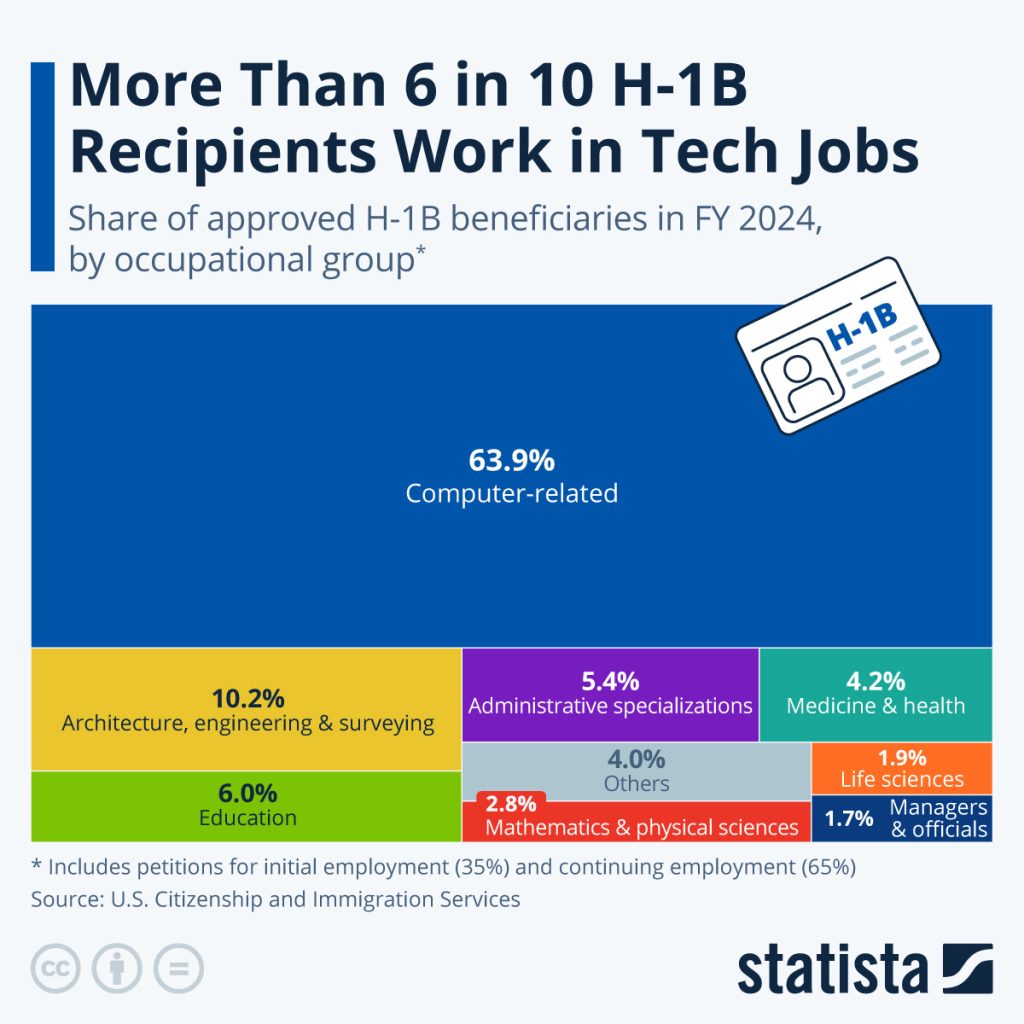

Exorbitant H-1B Fees

By The Numbers

George’s Data Take

More than 30 percent of H-1B visas go to non-technical roles, yet those applications will carry the same new $100,000 fee.

The policy sounds like a crackdown, but it kneecaps U.S. competitiveness by pushing skilled workers to other countries or by raising costs for employers who already play by the rules.

California Fines Lawyer $10K For ChatGPT Fake Information

Running Your Business

A state appeals court found 21 of 23 citations in a lawyer’s brief were made up by ChatGPT and fined him $10,000, warning that no paper filed in any court should contain material the attorney hasn’t personally read and verified.

Silver Beacon Behind The Scenes

This isn’t only a legal issue. Business leaders should follow suit by updating codes of conduct and making clear that while employees can use AI for editing, polishing their work, or verifying information, submitting work generated solely by it carries consequences.

Creating Deepfakes is Now Fast and Easy

Spotlight

Last Tuesday, OpenAI released Sora 2, a video generator that requires only a short text prompt to create a video. An entire AI deepfake can be created in less than an hour.

We’ve reached the “Synthetic Reality Threshold” where most humans can no longer distinguish authentic media from AI creations without technological help. A UNESCO analysis projects that 8 million deepfakes will be shared in 2025, up from just 500,000 in 2023. Europol predicts that 90% of online content may be generated synthetically by 2026.

So how do we function when we can’t trust what we see and hear?

What Do We Do About the Lack of Trust?

Researchers call this the “liar’s dividend.”

That UNESCO analysis defines it as “the ability to dismiss authentic recordings as probable fakes.”

Here’s how it works: In July 2025, Director of National Intelligence Tulsi Gabbard made allegations against former President Obama. Three days later, Trump posted a fabricated video of FBI agents arresting Obama in the Oval Office.

This is the liar’s dividend in action: flood the zone with synthetic content to redirect attention, create confusion, and make it harder to know what’s real.

Trump made this strategy explicit in a 2018 interview with CBS’ Lesley Stahl, admitting he bashes the press to discredit them so “when you write negative stories about me, no one will believe you.”

The liar’s dividend threatens you in two ways: scammers targeting your wallet and family, and political officials undermining democratic institutions.

Personal Threats: Scams and Extortion

The FBI reports that deepfake complaints more than doubled this year, with financial losses nearly tripling.

- Voice cloning scams mimic loved ones in fake emergencies.

- Corporate fraud uses fake video calls with executives; one company lost $25 million.

- Deepfaked doctors promote dangerous products.

- Meta made nearly $49 million from ads featuring fabricated videos of Senators Warren and Sanders promising nonexistent government rebates.

Political Threats: Social Manipulation

Governments worldwide also use deepfakes.

- Russia posted a deepfake of Ukraine’s President Zelenskyy urging surrender.

- Turkey’s Erdogan used deepfakes to link opponents to terrorists.

- Malaysia, Belgium, and the UK have dealt with deepfakes targeting political leaders.

In the U.S., Trump routinely posts fabricated videos. Last month, he shared a fake news segment touting a “medbed,” part of QAnon conspiracy theories, blurring synthetic media with fringe propaganda.

Many of his videos are cartoonishly obvious. But the volume matters more than the quality. Flooding the zone with fakes, even obvious ones, exhausts fact-checkers and erodes trust in all media.

Individuals Can’t Win the Tech Race

Detection tools lag behind creation technologies in an unwinnable arms race. One cybersecurity CEO said, “We’ve entered an era where anyone with a laptop and access to an open-source model can convincingly impersonate a real person.”

So we can’t reliably spot fakes, but we’re not helpless. Human discernment and practical strategies can still protect us.

Family Protections

Two strategies can protect you from personal scams:

1. Create a family codeword. Use a simple random word that everyone in the family knows and can easily remember. Tie it to a beloved family memory or inside joke that isn’t part of your social media profiles. When someone calls claiming to be in trouble, ask for the codeword. Voice cloning can’t fake what it doesn’t know.

2. Use a “prove you’re live” challenge. On video calls, ask the person to perform a sudden physical action like turning their head sharply to the side or touching their nose. Real-time deepfakes often glitch when forced to respond to unexpected movements.

Political and Social Protections

Three practices can help you navigate manipulated political content:

1. Avoid hot takes. Employ pauses. When you see surprising content, pause before reacting or sharing. Your amplification signals to platforms that the content is important, helping it spread. Take a breath. Wait.

2. Verify through multiple reliable sources. Even when a video is posted by a world leader, verify its authenticity. Check multiple news sources that have both the detection technology to identify fakes and the reporting resources to investigate them. Avoid relying solely on aggregators or social media.

3. Watch for the liar’s dividend in action. When politicians or officials claim real evidence is fake, be especially alert. Look for confirmation from multiple competing sources. Remember Trump’s admission to Lesley Stahl about discrediting the press preemptively so no one will believe negative stories.

Understanding How to Know

We’re no longer in a technology battle. The challenge now is for all of us to assess how we consume knowledge and to apply discernment in deciding whether to trust it.

As UNESCO researchers note, “AI literacy isn’t just about using AI tools—it’s about surviving in an AI-mediated reality where seeing and hearing are no longer believing.”

Wishing this away won’t change anything. Understanding how to process new information in the 21st century and incorporate that into your own beliefs is the biggest challenge facing us.

Meta Will Use Your AI Chats to Sell Data

Practical AI

Meta will mine user conversations with its AI tools to target ads on Facebook and Instagram, with no way to opt out. One more warning about Meta in particular: if you don’t pay, you are the product.

Windows 10 Users Will Get One Extra Year of Support

Protip

Microsoft will let Windows 10 users enroll for free extended security updates through 2026, giving businesses and holdouts more time before upgrading to Windows 11.

Viral Story of Chihuahua Joining Wolf Pack is False

Debunking Junk

Snopes confirmed that viral images of a Chihuahua running with wolves near Ely, Minnesota were AI-generated and edited, not real wildlife photos. And the bunnies weren’t on the trampoline either. Why make these deepfakes? Traffic & ad revenue sharing.

You should have known it was fake because most Chihuahuas would scare the wolves.

Open Enrollment Season is Here

Screening Room

Handheld 3D Printer Repairs Bone Like a Glue Gun

Science Fiction World

Researchers from Korea and the U.S. built a portable 3D printer that deposits bone-like material directly into fractures, healing defects in rabbits and paving the way for custom bone repair in surgery.

Mariners’ Search For a Good Deed Goes Viral

Tech For Good

After a fan gave Cal Raleigh’s 60th home run ball to a child, the Mariners’ comms manager took to social media asking for help finding “this incredible fan.” The online plea worked! Glenn (he gave only his first name) was found, met the superstar, and showed that kindness can trend, too.

Angelfire Still Lives. And It’s Still Owned By Lycos

Coffee Break

Visit Memory Lane with the Angelfire site builder that promises “No need to learn HTML or fancy coding.”

The graphics are still pure Angelfire. And since you’re undoubtedly wondering about launch dates: 1994 for Lycos and 1996 for Angelfire.

Sign of the Times